A small team of researchers at Xiamen University has expressed alarm at the ease with which bad actors can now generate fake AI imagery for use in research projects. They have published an opinion piece outlining their concerns in the journal Patterns.

When researchers publish their work in established journals, they often include images to show the results of that work. But the integrity of such images is now under assault by certain entities who wish to circumvent standard research protocols. Instead of producing images from their actual work, they can generate them using artificial-intelligence applications. Generating fake images in this way, the researchers suggest, could allow miscreants to publish research papers without doing any real research.

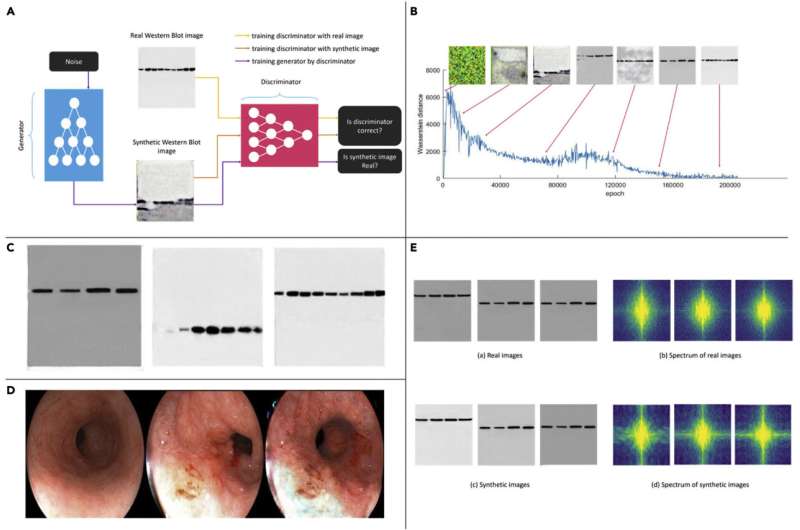

To demonstrate the ease with which fake research imagery could be generated, the researchers created some of their own using a generative adversarial network (GAN), in which two systems, one a generator and the other a discriminator, attempt to outcompete one another in creating a desired image. Prior research has shown that the approach can be used to create images of strikingly realistic human faces. In their work, the researchers generated two types of images. The first was of a western blot, an imaging technique used for detecting proteins in a blood sample. The second was of esophageal cancer images. The researchers then presented the images they had created to biomedical specialists; two out of three were unable to distinguish them from the real thing.
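The generator-versus-discriminator contest can be illustrated with a deliberately tiny sketch. This is not the researchers' model, which produced images. It is a hypothetical one-dimensional toy in which the "real data" are numbers drawn from a Gaussian, the generator is a linear map, and the discriminator is a logistic classifier. The function names, learning rate, step count, and the non-saturating generator loss are all assumptions made for illustration.

```python
import math
import random


def sigmoid(t):
    """Numerically safe logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Train a toy 1-D GAN (illustrative only; all settings are assumptions).

    Generator:      G(z) = a * z + b, with noise z ~ N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), its estimate that x is real
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.1, 0.0  # discriminator parameters

    for _ in range(steps):
        x_real = rng.gauss(real_mean, real_std)  # one "real" sample
        z = rng.gauss(0.0, 1.0)                  # noise fed to the generator
        x_fake = a * z + b                       # one generated sample

        # Discriminator step: gradient ascent on
        # log D(x_real) + log(1 - D(x_fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(G(z)), the common
        # non-saturating variant, using the updated discriminator.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w  # derivative of log D(x) w.r.t. x
        a += lr * grad_x * z
        b += lr * grad_x

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("generator:", a, b)
    print("discriminator:", w, c)
```

Each loop iteration lets the discriminator improve at telling real from generated samples, then lets the generator adjust its output to fool the updated discriminator. Toy setups like this one can oscillate rather than settle, so the returned parameters should be read as an illustration of the training loop, not as a converged model.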

The researchers note that it is likely possible to create algorithms that can spot such fakes, but doing so would be a stop-gap at best. New technology will likely emerge that could defeat detection software, rendering it useless. The researchers also note that GAN software is readily available and easy to use, and has therefore likely already been used in fraudulent research papers. They suggest that the solution lies with the organizations that publish research papers. To maintain integrity, publishers must prevent artificially generated images from showing up in work published in their journals.


Liansheng Wang et al, Deepfakes: A new threat to image fabrication in scientific publications?, Patterns (2022). DOI: 10.1016/j.patter.2022.100509

© 2022 Science X Network

Citation: Computer scientists suggest research integrity could be at risk due to AI generated imagery (2022, May 25) retrieved 30 May 2022 from https://techxplore.com/news/2022-05-scientists-owing-ai-imagery.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.
